AI Revolution and how to leverage it

We’re six months out from what will forever be considered the kickoff of the Artificial Intelligence revolution: the launch of ChatGPT by OpenAI. ChatGPT not only worked extremely well at launch, but the clean design and interface it was wrapped in also made it incredibly easy to use.

If you’re feeling overwhelmed by all the AI releases coming out, don’t worry, you’re not alone. Even those of us who are heads down, reading and building, are finding it hard to keep up with everything coming out.

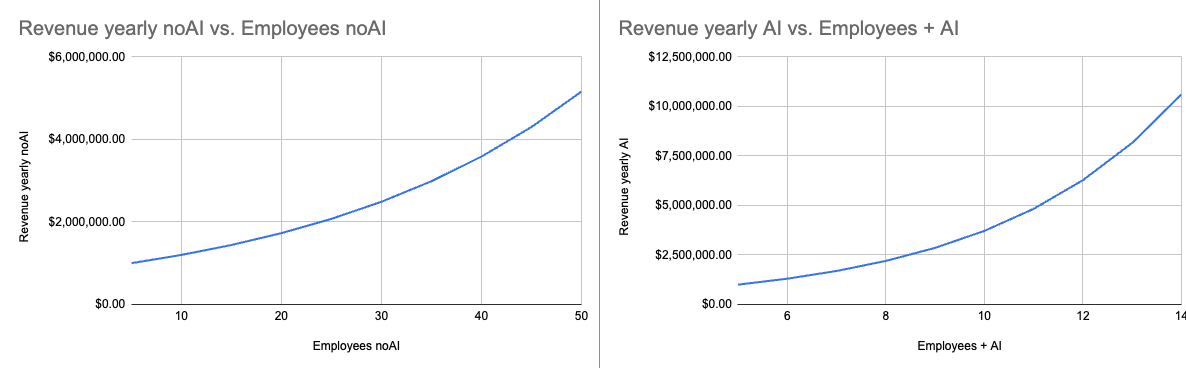

At the same time, if your organization’s research team isn’t actively working on how to embed these technologies into your business workflows, you will struggle to keep pace with the organizations that do end up leveraging these tools. It’s not about firing people or someone losing their job; it’s about making all your people 10x engineers, accountants, designers and writers.

From audio, image and video generative models and chat/text models (LLMs, or Large Language Models) to the current wave of agents, there is a lot going on.

This blog post will walk through some of these technologies and how to leverage them in your day-to-day. AI will make some jobs obsolete, but it will also allow people who leverage it to become 10x workers, and it will create new jobs that we can’t even imagine right now (how many of you would have said prompt engineer was a job one or two years ago?).

LLM - Large Language Models

You can think of an LLM as a parrot with a lot of memory, because that is exactly what these models are. They get trained on large amounts of text data (books, articles, papers, websites, etc.) and then use that knowledge to answer questions, provide suggestions, create content and hold a conversation.

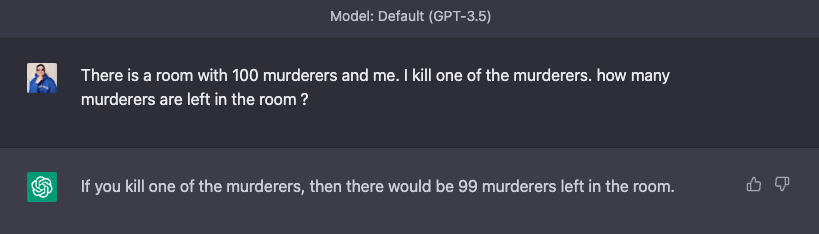

It’s easy to fall under the illusion that these models have “consciousness” or that they are “alive”. In reality, they generate text one word at a time, each time choosing the word with the highest probability of coming next given the words so far. Reasoning is something that keeps being improved in these models; a great example of the difference between GPT-3.5 and GPT-4 can be seen underneath.

A question posed to GPT-3.5 vs GPT-4

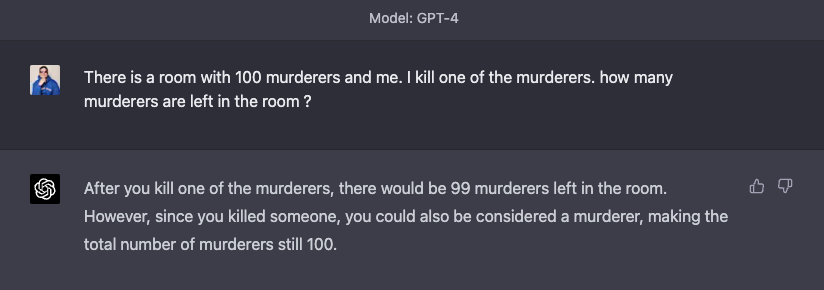

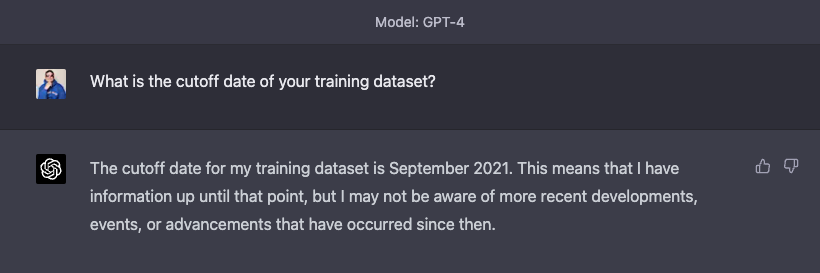

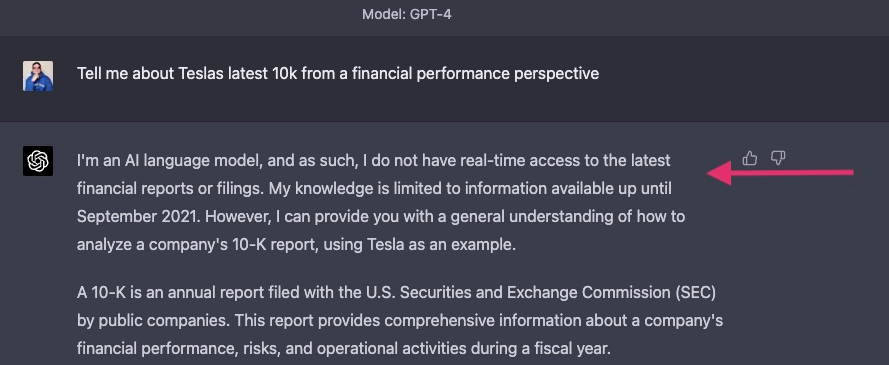
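To make the “highest probability next word” idea above concrete, here is a minimal sketch. The candidate words and their scores are made up for illustration (a real model scores tens of thousands of tokens and often samples rather than always taking the top one), so treat this as a toy picture of the mechanism rather than how any particular model is implemented.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {word: math.exp(score - peak) for word, score in scores.items()}
    total = sum(exps.values())
    return {word: value / total for word, value in exps.items()}


# Hypothetical scores a model might assign to candidate next words
# after the prompt "The cat sat on the". The numbers are invented.
TOY_SCORES = {"mat": 4.0, "sofa": 2.5, "moon": 0.5, "equation": -1.0}


def pick_next_word(scores):
    """Greedy choice: return the most probable next word and all probabilities."""
    probs = softmax(scores)
    return max(probs, key=probs.get), probs


next_word, probabilities = pick_next_word(TOY_SCORES)
print(next_word)
```

Repeating this step, appending the chosen word and scoring again, is what produces a full sentence.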

The models also have cutoff dates on the knowledge they were trained on. ChatGPT will tell you as much if you ask!

So does that mean you can’t use it for recent events? Or for your own data?

Think again! In comes LangChain 🦜️🔗

LangChain is a framework for developing applications on top of LLMs, with integrations for multiple LLMs. It lets you easily create embeddings from your own content and build agents or chains (sequences of calls) between LLMs and other tools. But to understand this, we must first understand what an embedding is.

Embeddings are numerical representations (vectors) of words, phrases or other language elements. As a loose analogy, think of how a compiler translates the code we write into machine code so that machines can run it; embeddings play the equivalent role for machine learning models that need to work with language. Texts with similar meaning end up with similar vectors, which essentially lets us convert unstructured data into a structured, searchable form.
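As a rough illustration of embeddings as numbers that can be compared, the sketch below uses invented three-dimensional vectors and cosine similarity, a common way to measure how close two vectors are. Real embeddings come from a model and have hundreds or thousands of dimensions; the values in `TOY_EMBEDDINGS` are assumptions for demonstration only.

```python
import math

# Made-up vectors; a real embedding model would produce these.
TOY_EMBEDDINGS = {
    "revenue": [0.9, 0.1, 0.0],
    "income": [0.8, 0.2, 0.1],
    "banana": [0.0, 0.1, 0.9],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: near 1.0 means very similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


close = cosine_similarity(TOY_EMBEDDINGS["revenue"], TOY_EMBEDDINGS["income"])
far = cosine_similarity(TOY_EMBEDDINGS["revenue"], TOY_EMBEDDINGS["banana"])
print(close > far)
```

With these toy values, “revenue” sits much closer to “income” than to “banana”, which is the property a similarity search relies on.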

But how does this relate to LangChain and all the discussion above? Let’s dive into an example!

As a first example, we ask GPT-4 to tell us about Tesla’s latest 10K form (for the year 2022). As we know from our discussion above, ChatGPT has a knowledge cutoff of September 2021, so the response is what we expect:

We know we can download the latest 10K form for Tesla from the SEC website.

We download the content of the page into a file called tsla-2023-10k.txt. Now let’s write some code.

The following code grabs the content of the tsla-2023-10k.txt file, sends it to OpenAI in chunks to generate the embeddings, and then stores them in Pinecone (a vector database, which is fancy talk for a database specialized in storing embeddings).

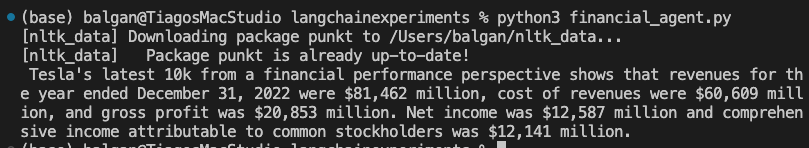

It then asks ChatGPT a query (“Tell me about Teslas latest 10k from a financial performance perspective”) by searching our Pinecone index for content similar to our question and passing only that content to OpenAI. Essentially, imagine copy-pasting parts of the 10K form into ChatGPT and saying “based on the content above, tell me about this 10K form”.

With the output looking like this:

And that, as a simple example, is how you can have ChatGPT use your own documentation or content to answer your questions.
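For readers who want to see the shape of that flow without any accounts or API keys, here is a self-contained sketch of the same steps: chunk the text, embed each chunk, store the vectors, find the chunks most similar to the question, and build the prompt. Everything in it is a stand-in: `toy_embed` is a simple word-count vector rather than an OpenAI embedding, `ToyVectorStore` is an in-memory list rather than Pinecone, the document text is a placeholder rather than real filing content, and none of the names are LangChain APIs.

```python
import math
import re
from collections import Counter


def chunk_text(text, chunk_size=10):
    """Split text into chunks of at most chunk_size words."""
    words = text.split()
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]


def toy_embed(text):
    """Stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a if token in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """Stand-in for a vector database: keeps (chunk, vector) pairs in memory."""

    def __init__(self):
        self.rows = []

    def add(self, chunk):
        self.rows.append((chunk, toy_embed(chunk)))

    def search(self, query, k=2):
        """Return the k stored chunks most similar to the query."""
        query_vector = toy_embed(query)
        ranked = sorted(self.rows, key=lambda row: cosine(query_vector, row[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


def build_prompt(question, context_chunks):
    """Paste the retrieved chunks above the question, as described in the text."""
    context = "\n\n".join(context_chunks)
    return f"Based only on the content below, answer the question.\n\n{context}\n\nQuestion: {question}"


# Placeholder text, not real filing content.
document = (
    "Placeholder paragraph about revenue and financial performance for the year. "
    "Placeholder paragraph about factories and manufacturing locations. "
    "Placeholder paragraph about employees and hiring."
)

store = ToyVectorStore()
for chunk in chunk_text(document):
    store.add(chunk)

question = "Tell me about the financial performance"
top_chunks = store.search(question, k=1)
prompt = build_prompt(question, top_chunks)
print(prompt)
```

The resulting `prompt` string is what would be sent to the model. Only the most relevant chunk travels with the question, which is why the approach works even when the full document is far too long to paste in.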

Agents

Another great piece of LangChain functionality is Agents. Agents essentially use LLMs to make decisions, take actions, observe the results of those actions and continue until the original task they were given is complete. This is an area that is growing fast: in March, Yohei Nakajima published an open source framework called “Baby AGI” (AGI stands for Artificial General Intelligence), which uses agents to complete a task. I grabbed his framework, modified the agents to use LangChain and gave them access to three tools: Google, a Python REPL and bash.

I then gave it an objective and first task:

Objective: behave as a cyber insurance underwriter and find which possible issues might lead to a cyber insurance claim.

First task: Learn about cyber insurance coverages and then by using available tools discover which issues might exist with www.linkedin.com

The results were interesting to observe: the agent ran for only about 2 minutes, and in that time it worked out that it needed nmap to identify issues and started using nmap to find open ports. Although this is clearly still at an early stage, one can easily see where this technology will go in the next few months or years.

Using @yoheinakajima framework as a foundation, I then transformed the agents into @LangChainAI agents and gave them access to google search, python and bash, and told it to become a cyber underwriter. Pretty cool to see what it does with just a few minutes running... #infosec pic.twitter.com/Hk5QA33PeS

— Tiago Henriques (@Balgan) April 4, 2023
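The decide, act, observe loop behind an agent can be sketched in a few lines. This is not Baby AGI or LangChain code: `scripted_policy` is a hard-coded stand-in for the LLM call that would normally choose the next action, and the two tools are toy functions with made-up data, so the example runs offline and shows only the control flow.

```python
def calculator_tool(expression):
    """Toy tool: add integers written as 'a+b+c'."""
    return str(sum(int(part) for part in expression.split("+")))


def lookup_tool(term):
    """Toy tool: look a term up in a tiny hard-coded table (made-up data)."""
    facts = {"items_found": "3"}
    return facts.get(term, "unknown")


TOOLS = {"calculator": calculator_tool, "lookup": lookup_tool}


def scripted_policy(task, history):
    """Stand-in for the LLM: decide the next (action, argument) from the history.

    A real agent would send the task and the history to a model here.
    """
    if not history:
        return ("lookup", "items_found")
    if len(history) == 1:
        previous_observation = history[-1][2]
        return ("calculator", f"{previous_observation}+1")
    return ("finish", history[-1][2])


def run_agent(task, policy, tools, max_steps=5):
    """Loop: decide, act, observe, until the policy says 'finish' or steps run out."""
    history = []
    for _ in range(max_steps):
        action, argument = policy(task, history)
        if action == "finish":
            return argument, history
        observation = tools[action](argument)  # act, then record what happened
        history.append((action, argument, observation))
    return None, history


answer, steps = run_agent("toy task", scripted_policy, TOOLS)
print(answer)
```

The `max_steps` cap is a deliberate design choice: an agent that decides its own next step needs a hard limit so it cannot loop forever.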

If you’re a founder, working on a marketplace for agents and tools for different frameworks is a great opportunity! At some point soon, we will have something similar to Zapier for AI agents.

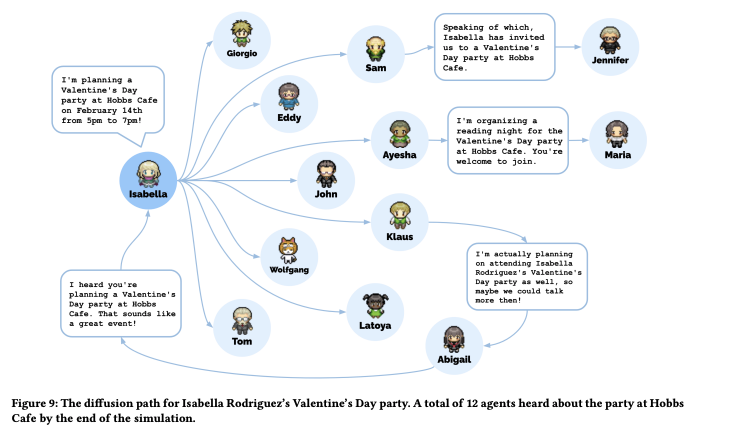

Google and Stanford recently put out a paper in which they let 25 agents interact in a simulated world, giving them memory and allowing them to recall, communicate, observe and reflect. The results are astounding.

They observed emergent social behaviors such as information diffusion: one agent announced they were planning a Valentine’s Day party, and other agents started spreading that information during conversations.

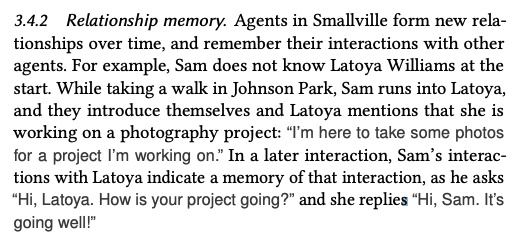

Much like in the real world, a number of agents skipped the party because they had other plans, and others just didn’t show. The agents also recall information about each other: one agent meets another in the park and tells him she is working on a photography project, and when they meet again later he asks her about the project.

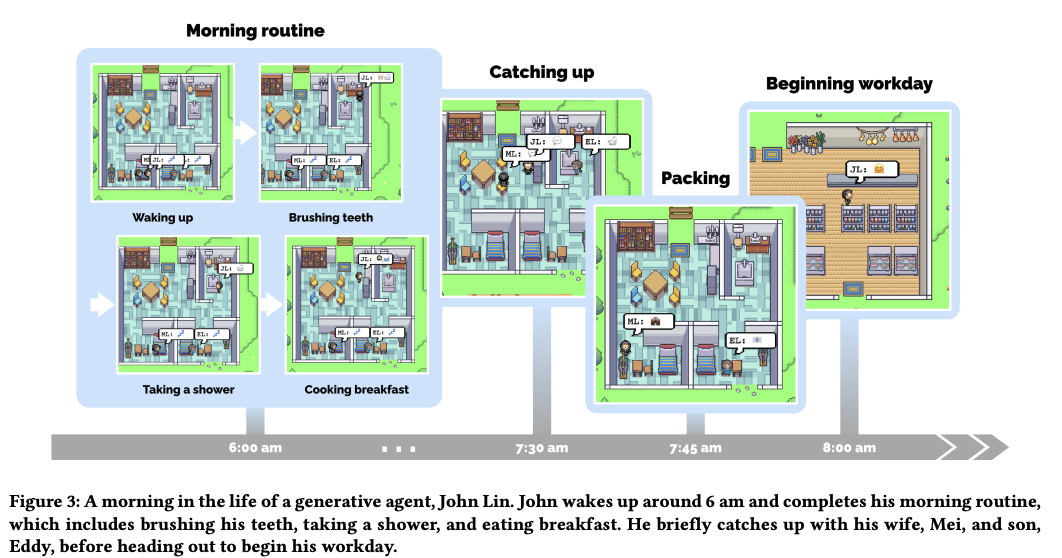
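A very rough way to picture “giving agents memory” is a log of observations that can be searched later. The sketch below is only that: a hypothetical `ToyAgentMemory` class with plain keyword matching. It is not the retrieval method used in the paper, which this post does not describe.

```python
from dataclasses import dataclass, field


@dataclass
class ToyAgentMemory:
    """Hypothetical memory: a list of (step, text) observations."""

    entries: list = field(default_factory=list)

    def observe(self, step, text):
        """Store something the agent saw or heard at a given time step."""
        self.entries.append((step, text))

    def recall(self, keyword, limit=3):
        """Return the most recent memories that mention the keyword."""
        matches = [entry for entry in self.entries if keyword.lower() in entry[1].lower()]
        matches.sort(key=lambda entry: entry[0], reverse=True)
        return [text for _, text in matches[:limit]]


memory = ToyAgentMemory()
memory.observe(1, "Met a neighbour in the park; she is working on a photography project")
memory.observe(2, "Ate breakfast at home")

# Later, on meeting the neighbour again, the agent looks up what it knows.
recalled = memory.recall("photography")
print(recalled)
```

In an LLM-driven agent, the recalled text would be added to the prompt so the model can bring the project up in conversation.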

The agents built life patterns. In the morning, John Lin always follows his morning routine of getting up, brushing his teeth, taking a shower, eating breakfast and talking to his son and wife.

What if we created simulated scenarios related to jobs, or to something we wanted these agents to become really good at? What would we learn or observe?

Maybe Westworld isn’t that far away….

Not just text!

AI techniques and technologies are evolving at an extremely fast pace. With image and sound generation in the mix, many industries are about to be disrupted. The other writing on the wall is that we will see scams increase over time because of these tools.

From voice cloning to deep fakes, these technologies are improving in quality while decreasing the entry barrier for scammers. I was on NBC talking about this just a few weeks ago as seen in the following clip:

In less than 30 minutes I was able to pull some clips of the journalist Emilie Ikeda from YouTube. We then called one of her colleagues and got her to give us her corporate credit card. None of it was staged (we actually had a first attempt that failed), and while it wasn’t perfect, it worked, and it will only get better from here.